Understanding the architecture and dataflow of a Data Lakehouse-based solution is essential for getting real value out of your data. In this video and blog post, we’ll explore the key components and processes involved, with a focus on Microsoft Fabric.

Ingest, Store, Prepare, Serve

A Data Lakehouse is organized around four primary phases: Ingest, Store, Prepare, and Serve. Each phase hands its output to the next as data flows through the system.

Separated Compute and Storage

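One of the key features of this architecture is the separation of compute and storage. Compute, in the form of the Spark engine, is used for data preparation, while the data itself is stored in OneLake. Because the two are decoupled, different engines, such as Spark, the SQL Endpoint, and Power BI, can work on the same copy of the data.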

Ingest Raw Data from the Sources

Raw data from various sources is ingested into the Files section of a “Landing” Lakehouse item. Data Factory Pipelines, a familiar and reliable tool, are a common choice for this step. Additionally, OneLake shortcuts can reference both external and internal data sources without copying the data, while Spark notebooks can pull data from APIs and other custom sources. Fabric also offers Real-Time Analytics, which adds streaming ingestion to the dataflow.
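For the notebook route, here is a minimal sketch of landing an API response unchanged in the Files section. It assumes the Landing Lakehouse is attached as the notebook’s default lakehouse, which Fabric exposes under a local mount such as `/lakehouse/default/Files`; the `raw/<source>/<yyyy>/<mm>/<dd>` folder layout and the `land_raw_payload` helper are hypothetical conventions for illustration. The API call itself is left out so the function can be tested on its own.

```python
import json
from datetime import datetime, timezone
from pathlib import Path

# Assumption: the Landing Lakehouse is the notebook's default lakehouse,
# so its Files section is reachable through this local mount.
LANDING_FILES = Path("/lakehouse/default/Files")


def land_raw_payload(records, source, files_root=LANDING_FILES, now=None):
    """Write an API response as-is into a date-partitioned raw folder.

    Hypothetical helper: the raw/<source>/<yyyy>/<mm>/<dd>/ layout is a
    convention chosen for this sketch, not something Fabric requires.
    """
    now = now or datetime.now(timezone.utc)
    target_dir = files_root / "raw" / source / f"{now:%Y}" / f"{now:%m}" / f"{now:%d}"
    target_dir.mkdir(parents=True, exist_ok=True)
    # One file per run; the timestamp keeps repeated runs from overwriting each other.
    target = target_dir / f"{source}_{now:%Y%m%dT%H%M%S}.json"
    target.write_text(json.dumps(records), encoding="utf-8")
    return target
```

In a notebook, `records` would typically come from an HTTP call to the source API. Keeping the data untouched at this stage means the preparation step can always be rerun from the original payload.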

Preparation, Cleaning, and Validation

Raw data usually needs preparation, cleaning, and validation before it is useful. This is where one or more notebooks come into play, with PySpark being the preferred choice for these tasks. The cleaned data is then saved in the Delta Lake format, whose ACID transactions protect data integrity and reliability.
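To make the kind of rules this step applies concrete, here is a minimal sketch in plain Python. The order schema (`order_id`, `customer_id`, `order_date`, `amount`) and the `clean_orders` function are hypothetical. In a Fabric notebook, the same rules would be expressed with PySpark DataFrame methods such as `dropDuplicates` and `filter`, and the result written with `df.write.format("delta")`; plain Python is used here only so the logic can be read and tested in isolation.

```python
from datetime import date


def clean_orders(raw_rows):
    """Split raw order records into (valid, rejected) lists.

    Hypothetical schema: order_id, customer_id, order_date (ISO string), amount.
    """
    valid, rejected, seen_ids = [], [], set()
    for row in raw_rows:
        # Trim whitespace from the key columns.
        order_id = str(row.get("order_id", "")).strip()
        customer_id = str(row.get("customer_id", "")).strip()
        try:
            # Cast to proper types; anything unparseable is rejected.
            order_date = date.fromisoformat(str(row.get("order_date", "")).strip())
            amount = round(float(row.get("amount")), 2)
        except (TypeError, ValueError):
            rejected.append({**row, "reject_reason": "unparseable date or amount"})
            continue
        if not order_id or not customer_id:
            rejected.append({**row, "reject_reason": "missing key"})
        elif amount < 0:
            rejected.append({**row, "reject_reason": "negative amount"})
        elif order_id in seen_ids:
            # Keep the first occurrence of each order; silently drop later duplicates.
            continue
        else:
            seen_ids.add(order_id)
            valid.append({"order_id": order_id, "customer_id": customer_id,
                          "order_date": order_date, "amount": amount})
    return valid, rejected
```

Returning the rejected rows together with a reason, instead of discarding them, makes it easy to store them separately and report on data quality.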

Business-Level Transformation

Once the data is clean, the next step is business-level transformation. This is where data is modeled into dimensions and facts that reflect how the business analyzes it, making it easier to understand and report on. As in the preparation phase, one or more notebooks do the work, but here Spark SQL is the preferred language.
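Here is a minimal sketch of the dimension/fact pattern expressed in SQL. It runs the statements in SQLite, via Python’s built-in `sqlite3` module, as a stand-in for Spark SQL so it can be executed anywhere; the table names (`cleaned_orders`, `dim_customer`, `fact_sales`) and columns are hypothetical, and Spark SQL syntax for DDL and key generation differs in places.

```python
import sqlite3


def build_star_schema(conn):
    """Derive a customer dimension and a sales fact from a cleaned table.

    SQLite stands in for Spark SQL here; table and column names are hypothetical.
    """
    conn.executescript("""
        DROP TABLE IF EXISTS dim_customer;
        DROP TABLE IF EXISTS fact_sales;

        -- Dimension: one row per customer, with a generated surrogate key.
        CREATE TABLE dim_customer (
            customer_key  INTEGER PRIMARY KEY,
            customer_id   TEXT UNIQUE,
            customer_name TEXT
        );
        INSERT INTO dim_customer (customer_id, customer_name)
        SELECT customer_id, MAX(customer_name)
        FROM cleaned_orders
        GROUP BY customer_id
        ORDER BY customer_id;

        -- Fact: one row per order, pointing at the dimension by surrogate key.
        CREATE TABLE fact_sales AS
        SELECT o.order_id, d.customer_key, o.order_date, o.amount
        FROM cleaned_orders AS o
        JOIN dim_customer AS d ON d.customer_id = o.customer_id;
    """)
```

In a Fabric notebook, similar statements would be submitted with `spark.sql(...)` and the results stored as Delta tables. The design choice is the same either way: descriptive attributes live once in the dimension, while the fact table holds measures plus keys.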

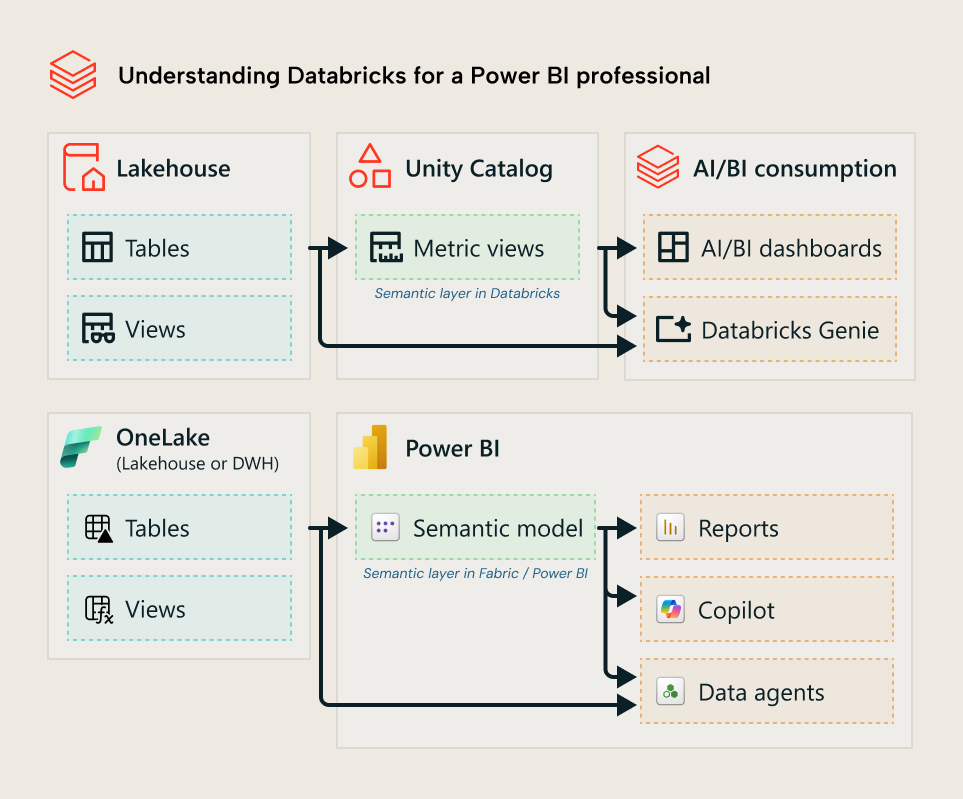

Serving Data to End Users

The ultimate goal of any data system is to serve valuable insights to end users. In the Data Lakehouse, this is achieved through a Power BI semantic model in Direct Lake mode, which reads the Delta tables in OneLake directly instead of importing a copy of the data. Alternatively, the SQL Endpoint exposes the same tables through T-SQL, giving users a more traditional way to query the data.

Orchestrating the Dataflow

Data Factory Pipelines tie the phases together. They act as the orchestrator, running the ingestion activities and notebooks in the right order so that data moves through each phase in sequence.
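The core idea of orchestration, running each step only after the steps it depends on have succeeded, can be sketched in a few lines. This is a conceptual model under stated assumptions, not the Data Factory runtime or its API: the `Activity` class and `run_pipeline` function are hypothetical, and the activity list is assumed to already be in dependency order.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Activity:
    """Hypothetical stand-in for one pipeline activity (copy, notebook, ...)."""
    name: str
    run: Callable[[], None]
    depends_on: List[str] = field(default_factory=list)


def run_pipeline(activities: List[Activity]) -> Dict[str, str]:
    """Run activities in order, skipping anything downstream of a failure.

    Models an 'on success' dependency between activities. Assumes each
    activity appears after all of the activities it depends on.
    """
    status: Dict[str, str] = {}
    for activity in activities:
        # Only run if every upstream activity succeeded.
        if any(status.get(dep) != "Succeeded" for dep in activity.depends_on):
            status[activity.name] = "Skipped"
            continue
        try:
            activity.run()
            status[activity.name] = "Succeeded"
        except Exception:
            status[activity.name] = "Failed"
    return status
```

Wiring the article’s phases as activities, a failure in the transformation step prevents downstream steps from running on incomplete data, which is exactly what an orchestrator is there to guarantee.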

The Curated Layer and Data Warehouse Option

Finally, the “Curated” layer can be replaced with a Data Warehouse item when needed. This is particularly useful when migrating from a classic data warehouse built on a SQL database, because T-SQL stored procedures can then be used for the business-level transformations.

In summary, a Data Lakehouse in Microsoft Fabric splits the work into distinct phases: data is ingested raw, stored in OneLake in Delta format, prepared and transformed with Spark notebooks, and served through Direct Lake or the SQL Endpoint, with Data Factory Pipelines orchestrating the flow. Understanding how these pieces fit together makes it easier to design a solution that scales with your data and supports informed decision-making.