If you want to follow along and understand how your Power BI tenant is used, it’s crucial to get your hands on all the activity events in the Power BI Audit Log. You basically have two options. The first is the unified audit log in the Microsoft 365 Admin Center, which you can access with a link from the Admin portal: https://app.powerbi.com/admin-portal/auditLogs

This is fine if you want to search for specific events, but not if you want to get a full overview. Also, the audit log requires some hefty permissions that most users don’t have. Furthermore, you only have 90 days of history.

Thankfully, we have a better option: we can extract the data using either the REST API or the PowerShell cmdlets. This way we can build up the history ourselves and make reports on all the data. Microsoft and community members have created nice and powerful solutions. Here are a couple of examples:

- How you can store All your Power BI Audit Logs easily and indefinitely in Azure, Gilbert Quevauvilliers

- Power BI Log Analytics Starter Kit, Nassim Kasdali

- Build Your Own Power BI Audit Log; Usage Metrics Across the Entire Tenant, Reza Rad

- Usage Monitoring with the Power BI API – Activity Log (Audit Log) Activity Event Data, Jeff Pries

So you might ask yourself: Why another solution? Great question indeed! My quick answer: because I want to keep it simpler, and I want to use the preferred tool for data extraction and ingestion: Azure Data Factory. This way I can easily set up a schedule and ingest the data wherever needed: Data Lake Storage, SQL database or any of the other 80+ supported destinations (sinks).

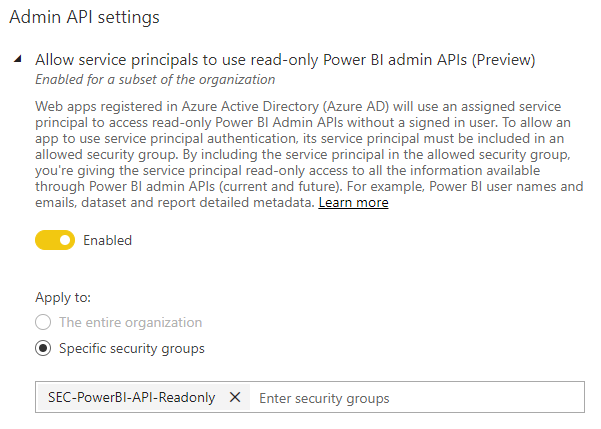

This option is, in my opinion, superior, as you can also handle the authentication quite easily with the new “Enable service principal authentication for read-only admin APIs” setting that’s currently in preview, and you don’t need to create and manage an App Registration.

- You start by creating a new Security Group in Azure Active Directory

- Then you add the “Managed identity” (service principal) of your Data Factory resource as a member of the security group

- And finally you add the security group to the Admin API tenant setting in the Power BI admin portal

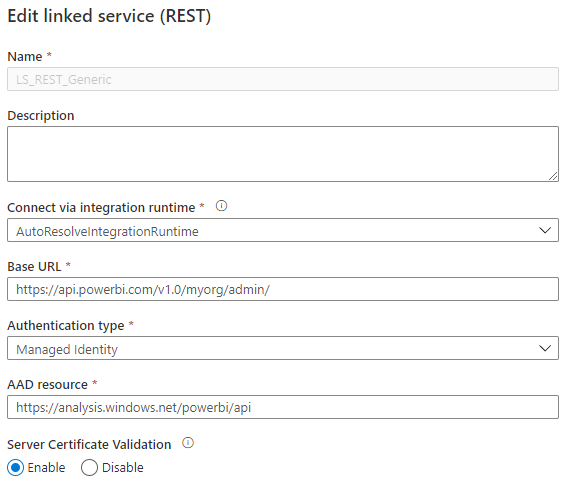

With these three steps handled, you now have access to call and read all the read-only Power BI admin REST APIs from your Data Factory resource using the MSI authentication method. Easy? The next step is to create a Linked Service of the type REST with these settings:

- Base URL: https://api.powerbi.com/v1.0/myorg/admin/

- Authentication type: System Assigned Managed Identity

- AAD resource: https://analysis.windows.net/powerbi/api

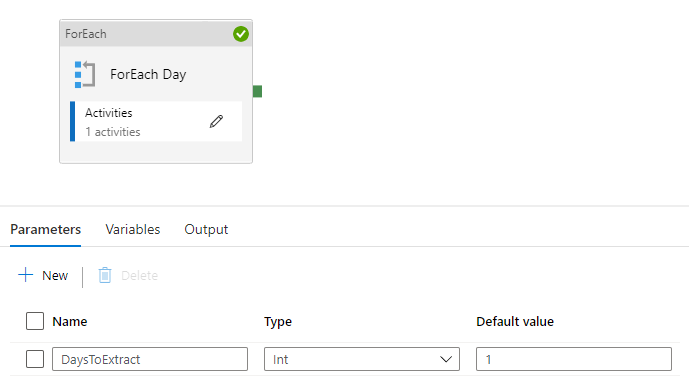
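If you want to test the same call outside Data Factory, the linked service settings boil down to two things: the relative URL is appended to the base URL, and a bearer token is requested for the AAD resource (token libraries usually expect the resource plus `/.default` as the scope). The Python sketch below only builds the request; the helper names are hypothetical, and acquiring the token is left out. One assumed option on an Azure resource with a managed identity is `azure.identity.ManagedIdentityCredential().get_token(scope).token`.

```python
from urllib.parse import urljoin
from urllib.request import Request

# Values taken from the REST linked service settings above.
BASE_URL = "https://api.powerbi.com/v1.0/myorg/admin/"
AAD_RESOURCE = "https://analysis.windows.net/powerbi/api"


def token_scope(resource: str = AAD_RESOURCE) -> str:
    """Turn an AAD resource URI into the '/.default' scope used by token libraries."""
    return resource.rstrip("/") + "/.default"


def build_admin_request(relative_url: str, access_token: str) -> Request:
    """Build a GET request against the admin API (hypothetical helper).

    `access_token` is assumed to have been acquired elsewhere for token_scope().
    """
    url = urljoin(BASE_URL, relative_url)  # base ends with '/', so the path is appended
    return Request(url, headers={"Authorization": f"Bearer {access_token}"}, method="GET")
```

Sending the request with `urllib.request.urlopen` and a valid token should return the same JSON that the Copy Activity reads.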

And finally, create a pipeline that calls the “Get Activity Events” REST API with the right set of URI parameters (startDateTime and endDateTime) and handles the continuationToken. Fortunately, this is also pretty easy if you already have some basic Data Factory skills. The API only supports extracting up to one day of data per request, so I have created a parameter called “DaysToExtract” and start with a ForEach activity. If I set the parameter to 1, it will extract yesterday’s data, and setting it to 30 will extract the last 30 days. Unfortunately, this is the maximum you can get out of this API, so it is extremely important that you build up the history yourself.

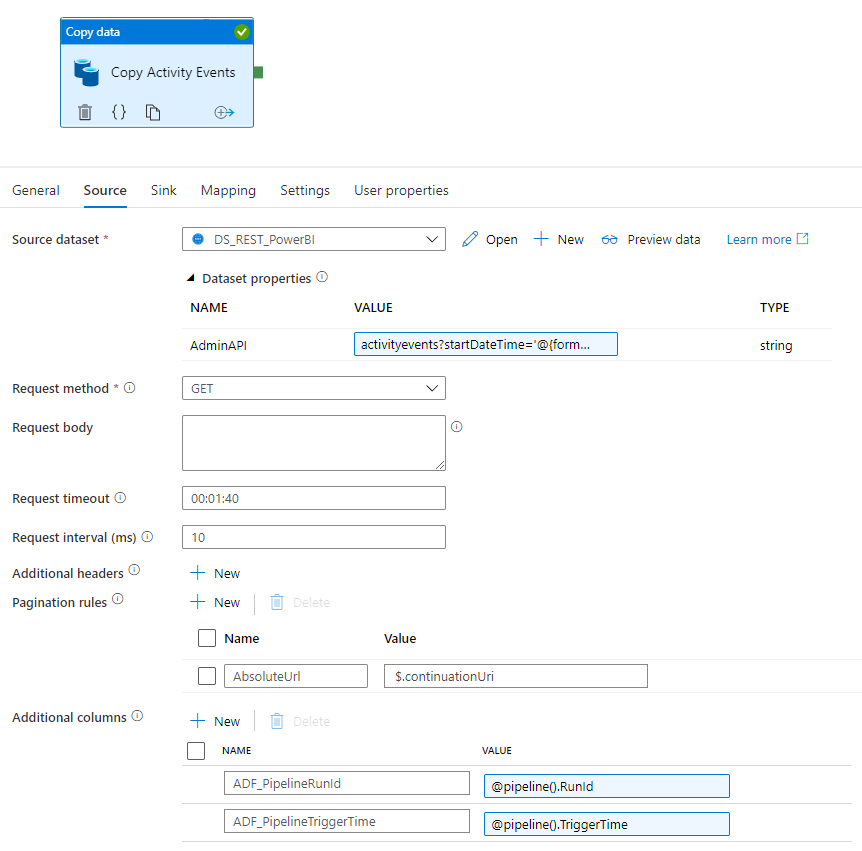

The ForEach activity is set up with `@range(1,pipeline().parameters.DaysToExtract)` as the Items in the settings, as it creates an array that the activity can loop over. Inside, we just need a plain and simple Copy Activity with a REST source dataset that points to the Linked Service. I have created a dataset with a parameter for the Relative URL, so I can reuse it to call other Admin APIs.

The value is set up with dynamic content to handle the startDateTime and endDateTime URL parameters, based on the current item from the array: `activityevents?startDateTime='@{formatDateTime(getPastTime(int(item()), 'Day'), 'yyyy-MM-dd')}T00:00:00.000Z'&endDateTime='@{formatDateTime(getPastTime(int(item()), 'Day'), 'yyyy-MM-dd')}T23:59:59.999Z'`

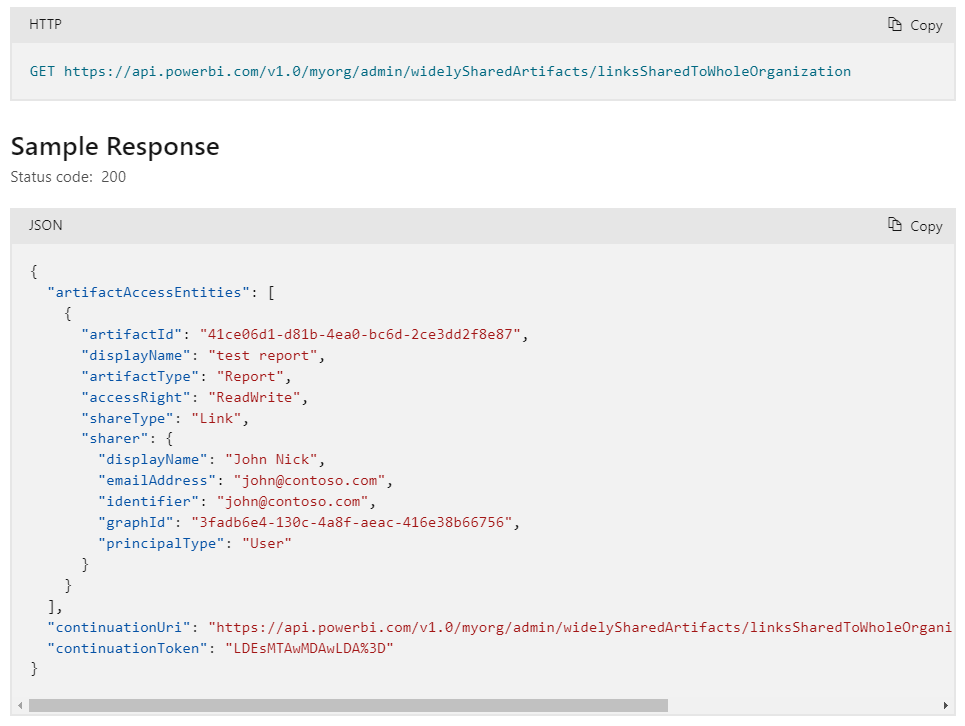
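The ForEach items and the relative URL can be reproduced in Python to check exactly which requests a run sends. One detail that is easy to misread: Data Factory’s `range()` takes a start index and a *count*, not an exclusive end like Python’s `range()`, so `range(1, 30)` yields 1 through 30. The function names below are hypothetical, and `now` is assumed to be a timezone-aware datetime.

```python
from datetime import datetime, timedelta, timezone

MAX_DAYS = 30  # the post notes 30 days is the most this API returns


def adf_range(start_index: int, count: int) -> list[int]:
    """Mimic Data Factory's range(startIndex, count): `count` integers from start_index."""
    return list(range(start_index, start_index + count))


def activity_events_relative_url(days_ago: int, now: datetime) -> str:
    """Equivalent of the dynamic-content relative URL for one ForEach item.

    Like formatDateTime(getPastTime(int(item()), 'Day'), 'yyyy-MM-dd'): subtract
    `days_ago` days from the current UTC time and keep only the date part.
    """
    day = (now.astimezone(timezone.utc) - timedelta(days=days_ago)).date().isoformat()
    return (
        f"activityevents?startDateTime='{day}T00:00:00.000Z'"
        f"&endDateTime='{day}T23:59:59.999Z'"
    )


def relative_urls(days_to_extract: int, now: datetime) -> list[str]:
    """One relative URL per day, as the ForEach over range(1, DaysToExtract) produces."""
    if not 1 <= days_to_extract <= MAX_DAYS:
        raise ValueError(f"DaysToExtract must be between 1 and {MAX_DAYS}")
    return [activity_events_relative_url(item, now) for item in adf_range(1, days_to_extract)]
```

Starting the range at 1 means today’s incomplete day is never requested; each URL covers one full UTC day.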

The continuationToken is handled with a simple AbsoluteUrl “Pagination rule”, and finally the dataset is enriched with two additional columns to help with lineage, so you can trace the files/rows back to when and how they were extracted.
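Outside Data Factory, the AbsoluteUrl pagination rule corresponds to a simple loop: request the first URL, then keep requesting the `continuationUri` from each response until `lastResultSet` is true. This Python sketch assumes a caller-supplied `fetch` function (hypothetical) that returns the parsed JSON body. The two lineage column names are illustrative stand-ins for the columns the pipeline adds, not the template’s exact names.

```python
from typing import Callable, Iterator


def iter_activity_events(
    first_url: str,
    fetch: Callable[[str], dict],
    run_id: str,
    trigger_time: str,
) -> Iterator[dict]:
    """Follow continuationUri until lastResultSet is true, yielding enriched events.

    `fetch` GETs a URL and returns the parsed JSON body (hypothetical).
    PipelineRunId / PipelineTriggerTime are illustrative lineage column names.
    """
    url = first_url
    while url:
        page = fetch(url)
        for event in page.get("activityEventEntities", []):
            # Build a new dict so the caller's page data is not mutated.
            yield {**event, "PipelineRunId": run_id, "PipelineTriggerTime": trigger_time}
        if page.get("lastResultSet"):
            break
        url = page.get("continuationUri")
```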

Next up is deciding your sink (destination). I chose an Azure SQL database because it gives me some flexibility to analyze the data straight away, and it’s easy to import into a Power BI dataset. The downside is that I need to set up the mapping and therefore need to figure out all the possible activityEventEntities in the returned JSON. I have identified 53, but as they’re not documented anywhere, I might have missed some, and new entities will possibly appear in the future, which I would then fail to extract. To overcome this, another solution is to store the entire JSON document in the Azure SQL database or in Blob Storage / Data Lake Storage. I will leave it up to you to choose your preferred solution.

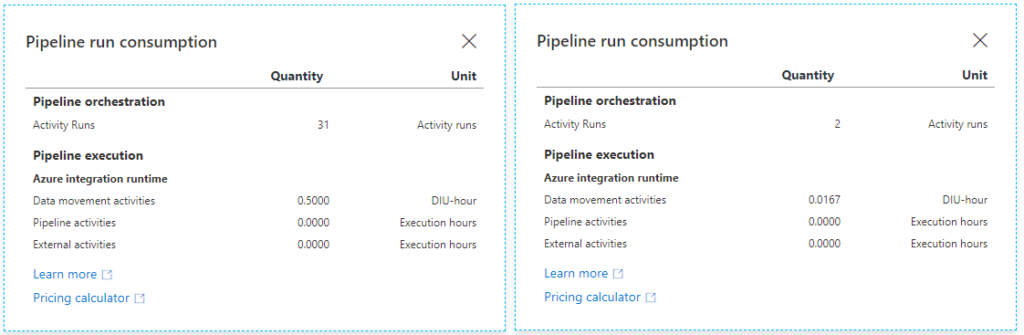
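If you go with the SQL mapping, one way to notice attributes you have not mapped is to compare the keys in the returned JSON with your list of mapped columns. A minimal Python sketch, assuming you keep the raw events somewhere (for instance a JSON copy next to the SQL sink); the helper is hypothetical.

```python
from typing import Iterable


def unmapped_fields(events: Iterable[dict], mapped_columns: Iterable[str]) -> list[str]:
    """Return event attributes that the sink mapping does not cover, sorted.

    Run it periodically to spot new, undocumented attributes that
    have started to appear in activityEventEntities.
    """
    mapped = set(mapped_columns)
    seen: set[str] = set()
    for event in events:
        seen.update(event.keys())
    return sorted(seen - mapped)
```

An empty result means every attribute seen so far is covered by the mapping.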

I hear a lot of people claim that Data Factory is expensive. And yes, it can be, but not always, and certainly not in this case. If you run this pipeline with DaysToExtract set to 30, it will do 31 activity runs and use 0.5000 DIU-hours, for a total price of around $0.15. The pipeline is also very quick and runs in around 30 seconds, as it calls the API in parallel.

If you only extract one day of data, which is the normal use case when running the pipeline nightly, then you will have 2 activity runs and 0.0167 DIU-hours. This translates to $0.005 per run.
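These figures follow from a simple model: one ForEach run plus one Copy run per day, and about one DIU-minute per Copy (0.5000 DIU-hours over 30 copies is about 0.0167 each). The Python sketch below plugs in assumed Azure integration runtime list prices ($1 per 1,000 activity runs, $0.25 per DIU-hour); treat the constants as placeholders and check current pricing for your region.

```python
# Assumed list prices; verify against current Azure Data Factory pricing.
PRICE_PER_ACTIVITY_RUN = 1.00 / 1000   # orchestration on the Azure integration runtime
PRICE_PER_DIU_HOUR = 0.25              # data movement on the Azure integration runtime
DIU_HOURS_PER_COPY = 1 / 60            # ~0.0167, as observed above


def estimated_run_cost(days_to_extract: int) -> float:
    """Estimate the USD cost of one pipeline run.

    One ForEach activity run plus one Copy activity run per day,
    with each Copy billed at roughly one DIU-minute.
    """
    activity_runs = 1 + days_to_extract
    diu_hours = days_to_extract * DIU_HOURS_PER_COPY
    return activity_runs * PRICE_PER_ACTIVITY_RUN + diu_hours * PRICE_PER_DIU_HOUR
```

With these assumed rates, 30 days comes to about $0.156 and a single day to about $0.006, close to the figures above.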

I have saved my pipeline as a custom template, free for you to use and modify as you like. To import it, you will have to use the “Pipeline from template” functionality and then click either “Use local template” or “Import template”, depending on whether you have connected your data factory to a code repository. The template includes both the pipeline and the two datasets, so you will only need to create the linked services yourself.

It’s uploaded to a GitHub repo that I plan to extend with further pipelines extracting valuable data from the Power BI REST APIs. The next interesting part is all the artifacts you can get from the new WorkspaceInfo API. This includes Lineage and Endorsement details that are not available in any other API, as well as lists of workspaces, reports etc. Hopefully I will have a blog post ready soon; the pipeline is already created.

UPDATE 2021-02-23

By popular demand, I have now created an additional pipeline with Azure Data Lake Storage as the destination. It saves the data as JSON in a hierarchical folder structure. Download the pipeline template here: https://github.com/justBlindbaek/PowerBIMonitor/blob/main/DataFactoryTemplates/PL_PowerBIGetActivityEvents_ADLS.zip
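The exact layout used by the template is not described here, so the following is only an illustration: a hypothetical Python helper that derives the folder and file name from the extracted day. Because the name depends only on the date, re-running the pipeline for the same day overwrites the file rather than creating a duplicate. The root folder name is an assumption.

```python
from datetime import date


def activity_events_path(day: date, root: str = "powerbi/activityevents") -> str:
    """Build a deterministic, date-partitioned path for one day of events (hypothetical layout).

    Re-running for the same day targets the same path, so the file is
    overwritten instead of duplicated.
    """
    return f"{root}/{day:%Y}/{day:%m}/{day:%d}/activityevents_{day:%Y%m%d}.json"
```

In Data Factory the same idea would be expressed with `formatDateTime` in the sink dataset’s folder and file name parameters.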

Excellent blog and tutorial. I am not experienced with ADF and I am able to create a working pipeline following your instruction. My wishlist is a way to define the output json file name so that it reflects the date of activity covered in the file. This will help with managing the json files in the data lake and avoid duplicated files if the pipeline is run multiple times. Would this be possible to include in your template? FYI I am going with the data lake option to store entire log data, to be cleaned and filtered later.

Glad that you can use it. I have uploaded a new template with Azure Data Lake Storage as the destination, where each file gets its own name and is placed in a hierarchical folder structure: https://github.com/justBlindbaek/PowerBIMonitor/blob/main/DataFactoryTemplates/PL_PowerBIGetActivityEvents_ADLS.zip

Hi, I followed this blog; it’s very clear and well explained. I am pretty new to ADF and this is my first time using a REST API as a data source. I have it sort of working: ADF is sinking data into my SQL Server, but only the fields

continuationUri,continuationToken,lastResultSet,ADF_PipelineRunld,ADF_PipelineTriggerTime.

I think the source JSON activityEventEntities is empty, as it isn’t returned. I have looked through the tutorial and don’t think I have missed anything. Is there something else I need to do?

Many thanks

I was actually also struggling with this at the start. In my case, I didn’t get the format of the Admin API URL right when passing in the startDateTime and endDateTime URL parameters. But I don’t suppose you have changed anything there?

Try changing it to this: `activityevents?startDateTime='@{formatDateTime(getPastTime(int(item()), 'Day'), 'yyyy-MM-dd')}T00:00:00.000Z'&endDateTime='@{formatDateTime(getPastTime(int(item()), 'Day'), 'yyyy-MM-dd')}T23:59:59.999Z'`. I have just put in an int() cast, which means you can use the “Preview data” feature on the source dataset. Then you can see what is returned from the REST call without needing to debug or trigger the pipeline. This could help you debug.
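When the sink only receives continuationUri, continuationToken and lastResultSet, the request URL is a good first suspect; copying the expression from a web page can also turn straight quotes into typographic ones. Here is a Python sketch of a quick check (the helper is hypothetical) that looks for typographic quotes, both parameters, their single-quote wrapping, and that both timestamps fall on the same UTC day, since the API only serves one day per request.

```python
from datetime import datetime
from urllib.parse import parse_qs, urlsplit

CURLY_QUOTES = "\u2018\u2019\u201c\u201d"


def check_activity_events_url(relative_url: str) -> list[str]:
    """Return a list of problems found in an activityevents relative URL (empty if none)."""
    problems = []
    if any(q in relative_url for q in CURLY_QUOTES):
        problems.append("contains typographic quotes; use straight single quotes")
        return problems
    query = parse_qs(urlsplit(relative_url).query)
    stamps = {}
    for name in ("startDateTime", "endDateTime"):
        values = query.get(name)
        if not values:
            problems.append(f"missing {name}")
            continue
        value = values[0]
        if not (value.startswith("'") and value.endswith("'")):
            problems.append(f"{name} is not wrapped in single quotes")
            continue
        try:
            stamps[name] = datetime.strptime(value.strip("'"), "%Y-%m-%dT%H:%M:%S.%fZ")
        except ValueError:
            problems.append(f"{name} is not in yyyy-MM-ddTHH:mm:ss.fffZ format")
    if len(stamps) == 2 and stamps["startDateTime"].date() != stamps["endDateTime"].date():
        problems.append("startDateTime and endDateTime must be in the same UTC day")
    return problems
```

Paste the Request URL from an error message, minus the base URL, to see what is wrong with it.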

Really Cool article, Keep up the great work sir.

Great blog article. Tried using the data factory before but couldn’t get the connection right. This helps a lot.

Thank you so much for sharing this, really useful. I have a question: when we access data through this API, is it going to pull data out of the Power BI activity log or the unified audit log? And do we require tenant-level permissions for this, or is it fine if we create a security group and add this group in the Power BI admin portal tenant settings? Thank you again.

Hi Ananth. This solution uses the Power BI activity log, so adding the security group in the Power BI Admin tenant settings, as you suggest, is enough.

Thanks a lot for the post. I am trying to understand the data: when Activity=ViewReport, would you or anyone else know what it means when ActivityID=00000000-0000-0000-0000-000000000000 while the RequestID is filled in with a lot of numbers?

Sorry. No I don’t know what this means.

Thank you for the article, this is very helpful

Great blog, managed to get this working to bring in 30 days of data then working on a trigger to load daily data 🙂 Thank you!

Quick question – is there a way to amend this to bring in a specific time period of 30 days, instead of only the past 30 days? e.g. if I want to go back a bit further to get historical data?

Thanks 🙂

Unfortunately, the API only exposes 30 days of data. If you can get your hands on the Microsoft 365 audit log, you can get 90 days of data. But this requires some pretty elevated permissions: https://docs.microsoft.com/en-us/power-bi/admin/service-admin-auditing#use-the-audit-log
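Because the API never reaches further back than 30 days, a custom period can only be served from your own stored extracts. A minimal Python sketch, assuming each event carries an `Id` and an ISO 8601 `CreationTime` (both assumptions to verify against your data), that merges daily extracts without duplicates and then filters an arbitrary date range; the helper names are hypothetical.

```python
from datetime import date
from typing import Iterable


def merge_history(history: dict[str, dict], new_events: Iterable[dict]) -> dict[str, dict]:
    """Add newly extracted events to a history keyed by event Id.

    Overlapping runs (for example re-extracting the last 30 days) replace
    existing entries instead of duplicating them.
    """
    merged = dict(history)
    for event in new_events:
        merged[event["Id"]] = event
    return merged


def events_between(history: dict[str, dict], start: date, end: date) -> list[dict]:
    """Return stored events whose CreationTime date is within [start, end], oldest first."""
    selected = [
        e for e in history.values()
        if start <= date.fromisoformat(e["CreationTime"][:10]) <= end
    ]
    return sorted(selected, key=lambda e: e["CreationTime"])
```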

Anyone got any suggestions for consuming the resulting log files? I’ve managed to produce them and place them in an ADLS store. I can download one of them and “open” it in Power BI Desktop, although Power BI applies a few steps automatically.

However, if I try to consume a log file from its ADLS location, Power BI complains that the file is malformed. It does not seem that you have any control over the API output :/

regards

Emil

I would recommend taking a look at the solution from Rui Romano. He uses PowerShell to grab the files, but he has also created some nice reports to go on top. It’s the same files, so you can let it inspire you and adjust the report to your needs: https://github.com/RuiRomano/pbimonitor
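One possible cause of the “malformed” error, and only a guess: Data Factory’s JSON sink can write either an array of objects or a set of objects (one JSON object per line), and a one-object-per-line file is not a single JSON document. This Python sketch (hypothetical helper) accepts both shapes, plus a raw API response, which can help confirm what the files actually contain before building a report on them.

```python
import json


def load_events(text: str) -> list[dict]:
    """Parse a file written either as one JSON document or as one JSON object per line.

    Handles a top-level array, a single API response with activityEventEntities,
    a single event object, or JSON Lines.
    """
    stripped = text.strip()
    try:
        doc = json.loads(stripped)
    except json.JSONDecodeError:
        # Not a single document: treat it as one JSON object per line.
        doc = [json.loads(line) for line in stripped.splitlines() if line.strip()]
    if isinstance(doc, dict):
        doc = doc.get("activityEventEntities", [doc])
    return doc
```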

Thank you! I’ll explore this alternate method.

OMG you made my day and helped me a lot with this amazing blog

Hello,

great job!!

I have done everything as you indicate, and I cannot connect. However, from a Power BI dataflow I can access the API with the service app. What am I missing?

Error code

23353

Details

The HttpStatusCode 403 indicates failure.

Request URL: https://api.powerbi.com/v1.0/myorg/admin/activityevents?startDateTime='2022-02-21T00:00:00.000Z'&endDateTime='2022-02-21T23:59:59.999Z'

Response payload:{"Message":"API is not accessible for application"}

Activity ID: baec6ec0-6b61-4b58-857f-33e29e35151d

This sometimes happens for up to 10-15 minutes after you set up the authentication that allows your Data Factory to read from the APIs. Have you got it working now?
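If you script your own check after changing the tenant setting, a bounded retry on 403 covers the propagation delay described above. A Python sketch with a hypothetical `call` function that returns the status code and body; the defaults (four attempts, five minutes apart, so at most 15 minutes of waiting) are assumptions chosen to match that window.

```python
import time
from typing import Callable, Tuple


def call_with_propagation_retry(
    call: Callable[[], Tuple[int, str]],
    attempts: int = 4,
    wait_seconds: float = 300,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Retry while the call returns HTTP 403, e.g. right after changing tenant settings.

    `call` is hypothetical and returns (status_code, body). Any other status
    code is returned immediately; the last 403 is returned after `attempts` tries.
    """
    for attempt in range(1, attempts + 1):
        status, body = call()
        if status != 403 or attempt == attempts:
            return status, body
        sleep(wait_seconds)
    raise ValueError("attempts must be at least 1")
```

A 403 that persists well beyond the window points to configuration instead, for example the security group membership or the scope of the tenant setting.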

Hi Saulo.

I have the same issue. Did you get yours working ?

br Tom

Hello, thank you very much for this demonstration! I’m actually having the same issue as above:

ErrorCode=RestSourceCallFailed,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,

Message=The HttpStatusCode 403 indicates failure.

Request URL: https://api.powerbi.com/v1.0/myorg/admin/activityevents?startDateTime='2022-07-06T00:00:00.000Z'&endDateTime='2022-07-06T23:59:59.999Z'

Response payload:{"Message":"API is not accessible for application"},

Source=Microsoft.DataTransfer.ClientLibrary,'

I set up the authentication a few hours ago now, so I really don’t know where it is coming from. Would you have an idea?

Thank you very much

It actually ended up working! I enabled the authorization in Power BI for the whole organization, not just my security group.

Just wanted to say that this is a well-thought out, excellent alternative to struggling through powershell and other code-heavy approaches. Thank you so much for taking the time to post this – it was a big help to me today.

I’m unable to use any of your pipeline templates. With every one I get an “Invalid template, please check the template file.” error

That’s really strange. I have successfully imported them into multiple different tenants without any errors. I have tried reproducing the error with a zip file that doesn’t contain a template. Then I get this error message: “Import template error, please check the zip file.” But you get a different error message? I’m a little confused.

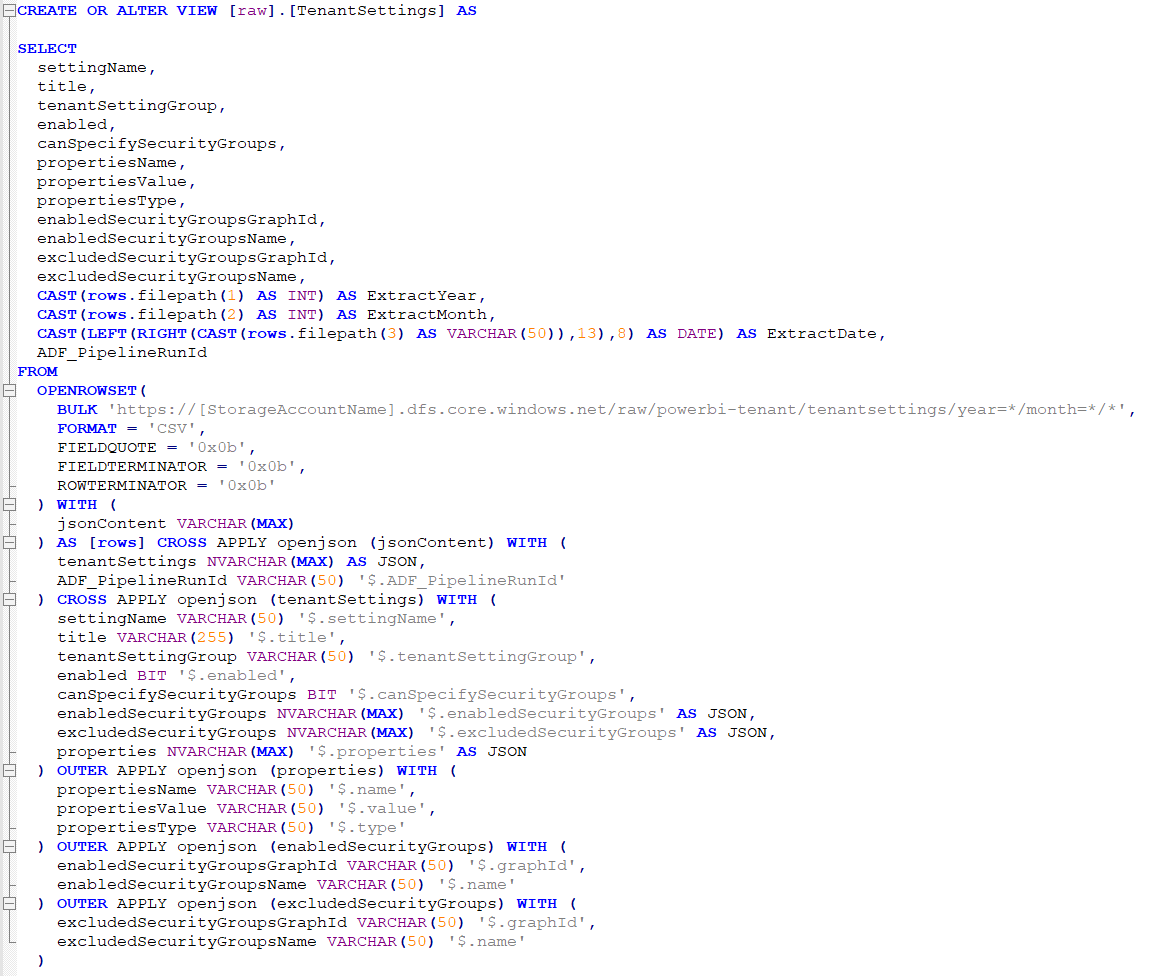

Hi,

Thank you very much for the detailed explanation and for the code. It is working great if we just configure the pipelines according to our environments.

It would be even more helpful if you could provide more details on extracting data using the Graph API and on the permissions required for the (ADF/Synapse) MI.

Much appreciated for the inputs.

Thanks again

Hi Praveen,

I unfortunately don’t have a solution to extract data from the Graph API with the built-in Managed Identity from ADF/Synapse. I recently heard it could be possible, but you have to assign the permissions with PowerShell. It’s worth a try and could be a good blog post. Let me see if I can find the time to explore it.

For now my best advice would be to create an App registration and follow this blog post: https://justb.dk/blog/2022/04/extracting-microsoft-graph-data-with-data-factory/

Best regards

Just

Thanks for sharing the templates. Outputting to the JSON file format worked perfectly.

If I output to CSV files, the content only has the columns “continuationUri,continuationToken,lastResultSet,ADF_PipelineRunld,ADF_PipelineTriggerTime”.

Can the activity be output to CSV files?

I don’t have experience with output to CSV, but I would expect it to be possible. It’s a matter of doing the correct mapping of the fields. If I recall correctly, you’ll have to go to the Advanced option of the mapping. Just be aware that it will be a big task, as there are way more than 100 fields and the list is growing.
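To illustrate what such a mapping has to achieve, here is a Python sketch (not part of the templates; the helper is hypothetical) that flattens events into CSV. It uses the union of all attribute names as columns, leaves missing values empty, and stores nested lists or objects as JSON strings.

```python
import csv
import io
import json
from typing import Iterable


def events_to_csv(events: Iterable[dict]) -> str:
    """Write activity events to CSV text, one column per attribute seen (sorted)."""
    rows = list(events)
    fieldnames = sorted({key for row in rows for key in row})
    buffer = io.StringIO()
    # restval="" leaves a cell empty when an event lacks that attribute.
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
    writer.writeheader()
    for row in rows:
        writer.writerow({
            k: json.dumps(v) if isinstance(v, (list, dict)) else v
            for k, v in row.items()
        })
    return buffer.getvalue()
```

Because the column set is derived from the data, a new attribute shows up as a new column instead of being silently dropped.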

Thank you for sharing this solution.

I was trying to get the prerequisites set up by my IT department. But they complain that I need a Tier 1 at a high monthly rate. Is that actually required for this solution?

I’m not sure what a Tier 1 is. You can run the solution at a very low monthly rate.